Duality was founded with a succinctly framed vision: “Virtual worlds for solving real problems”. Even in 2018, our founding team had deep experience building high-fidelity virtual environments — but to solve real problems, we had to engineer novel ways to bridge the real and the virtual. In other words, we had to prove we could close the sim2real gap.

Over the years, customer by customer, across diverse use cases (from drone detection to manufacturing to off-road autonomy and beyond), we’ve shown that Falcon’s digital twin simulation not only generates synthetic data that rivals real data, but also closes the gaps left by real-world data collection: rare edge cases, dangerous conditions, costly scenarios, and environments that are simply impractical to capture at scale. The result is a faster, lower-cost path to higher-performing AI models. (And you don’t have to take our word for it: you can read our award-winning papers here.)

But a second challenge remained: accessibility. Could we make Falcon’s benefits available to more teams without requiring deep specialization in 3D engines, USD file structures, and simulation workflows — skill sets that aren’t common to most AI/ML, robotics, or program teams?

In a recent blog, we explored the rapid progress of generative world models and why they feel so compelling: they offer a natural-language interface to content creation, and an impressive ability to generalize. However, at a fundamental level, generative approaches still struggle with the requirements of physical AI development. They lack accuracy, precision, introspectability, and physical consistency, making it difficult to generate reliable sensor data, enforce constraints, reproduce scenarios, or produce trustworthy ground truth. We called this the gen2real gap.

In that same post, we argued that simulation and generative approaches are not mutually exclusive. They sit on a continuous spectrum of world modeling, and we proposed that the best path forward is a hybrid workflow that combines the strengths of both. Specifically, hybrid synthetic data pipelines that leverage agentic workflows can accelerate scenario authoring and variation, while anchoring the result in a physically grounded simulation that inherently supports reproducibility, controllability, and validation.

Today, we’re excited to release that workflow as an integrated workbench for Falcon.

We call it Vibe Sim — and we can’t wait for you to try it!

Introducing Vibe Sim

What is Vibe Sim?

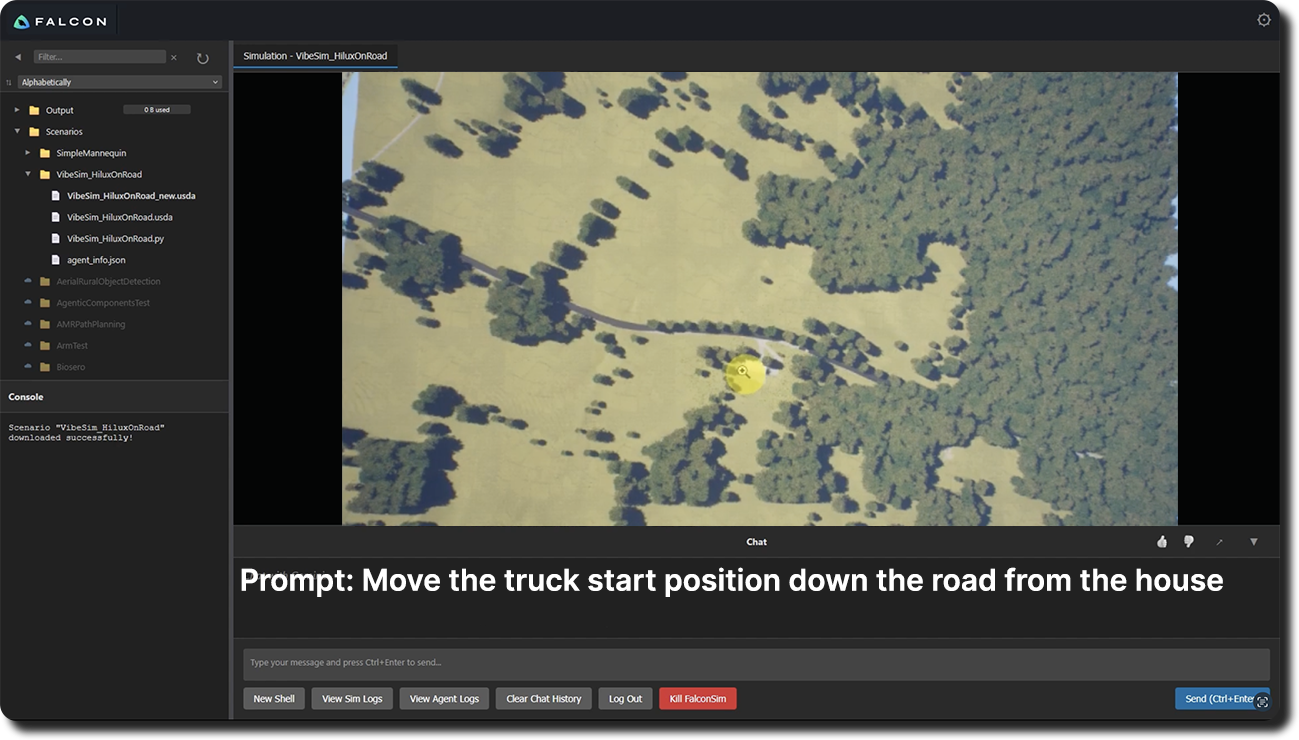

Designed with AI teams in mind, Vibe Sim combines the speed and accessibility of natural-language interaction with the accuracy and precision inherent to digital twin simulation. Instead of requiring users to manually edit USD scene graphs, author behaviors, or build scenarios in a 3D editor, Vibe Sim lets teams construct and modify simulation scenarios through an agent-driven workflow — while keeping the underlying assets, parameters, and outputs fully structured, editable, and reproducible.

With Vibe Sim, users can:

- build and modify scenarios

- adjust environment, sensor, and behavior parameters

- generate targeted synthetic datasets with ground truth

- train and evaluate model performance

- iterate quickly on failure cases

— all in the cloud, and without needing to directly manipulate files or operate a traditional 3D toolset.

The result is a workflow that dramatically expands who can use simulation effectively — enabling more teams to turn any scenario into a nearly-limitless, controllable source of synthetic data for training, testing, and validation.

How does it work?

At its core, Vibe Sim is an agentic simulation co-pilot built with FalconSim as a critical agent tool, enabling users to control, modify, and explore simulations through LLM-powered agents. Instead of requiring users to manually edit USD layers, configure scenario files, or operate a 3D editor, Vibe Sim translates natural-language intent into structured, validated simulation changes.

Vibe Sim is powered by three core technologies:

- Falcon’s Python API, which provides programmatic control over scenario composition, simulation execution, and synthetic data generation

- Duality’s USD-based Digital Twin Encapsulation Standard (DTES), which defines how digital twins, scenes, and parameters are represented in a consistent, modular format

- Google DeepMind’s Gemini 3 Pro multimodal large language model, which provides the reasoning layer for planning, tool use, and natural-language interaction

In short, Vibe Sim is implemented as a Falcon-Gemini 3 Pro multi-agent integration that translates user prompts into concrete simulation actions. Because Gemini 3 Pro has strong capabilities with Python and OpenUSD concepts, Vibe Sim’s agents can reliably perform tasks that normally require specialized simulation or 3D work (e.g., importing digital twins, composing scenario files, adjusting parameters, configuring sensors, and setting up dataset outputs).

The interplay among the Main Agent, its sub-agents, and the tools available to them can be seen every time a user prompts Vibe Sim for an action.

As shown in the animation above, the top three icons represent agents, while the bottom four represent the tools available to the Main Agent. Every time a user prompts Vibe Sim to adjust a simulation, the Main Agent interprets the request and consults a specialized sub-agent to discover the relevant parameters, assets, or operations needed to fulfill it.

But what are the actual roles of each agent and tool?

Agents — Gemini 3 Pro LLM prompted with specific instructions and roles.

- Main Agent: Interfaces with the user, reasons through how to meet the user’s request, plans which tools to use to fulfill it, and calls those tools.

- Properties Agent: This agent deeply understands digital twins and scenarios. It provides the Main Agent with information about the digital twins, including semantic reasoning about the user's request (e.g., if the user asks for "a line of towers spaced at 100 m intervals", they likely mean a transmission tower, not a cell tower or a castle tower). It then provides a list of reasonable twins to the Main Agent. It can also provide vital information on the characteristics of digital twins, for example: "what sensors are on this twin by default".

- Behaviors Agent: When the Main Agent needs to modify twin behavior — such as programming a vehicle to follow a patrol route or configuring a sensor pipeline — it delegates to this sub-agent, describing the desired behavior in natural language. The Behaviors Agent then produces validated Python code, which the Main Agent reviews and writes to the appropriate script file.
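To make the delegation pattern above concrete, here is a minimal sketch in plain Python. It is not Vibe Sim's implementation: the catalog entries, function names, and returned plan structure are all hypothetical, and simple keyword overlap stands in for the LLM-based semantic reasoning the real Properties Agent performs.

```python
import ast
from typing import Optional

# Hypothetical catalog entries; real FalconCloud twins and metadata will differ.
CATALOG = {
    "transmission_tower": {"tower", "power", "line"},
    "cell_tower": {"tower", "telecom", "antenna"},
    "castle_tower": {"tower", "castle", "medieval"},
}


def properties_agent(prompt: str) -> list[str]:
    """Stand-in for the Properties Agent: rank twins by keyword overlap.

    The real agent reasons with an LLM; keyword overlap is only a
    placeholder so this sketch runs without a model.
    """
    words = {w.strip('.,"').lower().rstrip("s") for w in prompt.split()}
    scored = [(len(tags & words), name) for name, tags in CATALOG.items()]
    ranked = sorted(scored, key=lambda item: (-item[0], item[1]))
    return [name for score, name in ranked if score > 0]


def behaviors_agent(description: str) -> str:
    """Stand-in for the Behaviors Agent: return Python source for a behavior."""
    return (
        f"# Behavior request: {description!r}\n"
        "def behavior(twin):\n"
        "    return None\n"
    )


def main_agent(prompt: str, behavior: Optional[str] = None) -> dict:
    """Stand-in for the Main Agent: delegate, review, and assemble a plan."""
    candidates = properties_agent(prompt)
    script = None
    if behavior is not None:
        script = behaviors_agent(behavior)
        ast.parse(script)  # Review step: raises SyntaxError if the code does not parse.
    return {
        "twin": candidates[0] if candidates else None,
        "candidates": candidates,
        "script": script,
    }


plan = main_agent("a line of towers spaced at 100 m intervals")
print(plan["twin"])
```

In this toy version, the word "line" in the prompt is what pushes the transmission tower ahead of the other two candidates; a real agent would rely on far richer context than tag matching.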

Tools — Services that can be called by the agents using the Model Context Protocol (MCP).

- Catalog: Provides access to the FalconCloud catalog. It is used by the Properties Agent to search for digital twins, and by the Main Agent to download them.

- DTES Parser: Manages the construction of the USD files and validation of the USD generated by the Main Agent.

- FalconSim: The simulation tool at the heart of Vibe Sim. In this implementation, it provides the ability to start, stop, and reload the simulation process, as well as to read and write data from a currently running simulation.

- File Manager: Gives the Main Agent read/write access to the filesystem so it can read additional information about the scenario, inspect data output, and handle other general-purpose tasks.
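As a rough illustration of how an agent might reach tools like these by name, the sketch below uses a plain Python registry and dispatcher. It does not use the MCP SDK and is not Duality's implementation: the tool names, the in-memory stand-ins, and the name-plus-arguments request shape are assumptions made for the example.

```python
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Register a function under a tool name (hypothetical naming scheme)."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


# In-memory stand-ins; the real tools talk to FalconCloud, USD files, and FalconSim.
_TWINS = ["desert_site", "pickup_truck", "transmission_tower"]
_FILES: dict[str, str] = {}


@tool("catalog.search")
def catalog_search(query: str) -> list[str]:
    """Return twin names containing the query string."""
    return [t for t in _TWINS if query.lower() in t]


@tool("file_manager.write")
def file_write(path: str, text: str) -> int:
    """Store text under a path and return the number of characters written."""
    _FILES[path] = text
    return len(text)


@tool("file_manager.read")
def file_read(path: str) -> str:
    """Return the text previously stored under a path."""
    return _FILES[path]


def call_tool(request: dict) -> dict:
    """Dispatch a {"name": ..., "arguments": {...}} request to a registered tool."""
    fn = TOOLS.get(request["name"])
    if fn is None:
        return {"ok": False, "error": f"unknown tool: {request['name']}"}
    try:
        return {"ok": True, "result": fn(**request.get("arguments", {}))}
    except Exception as exc:  # Report failures back to the agent instead of crashing.
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


print(call_tool({"name": "catalog.search", "arguments": {"query": "truck"}}))
```

Returning errors as data rather than raising lets the calling agent read the failure and decide whether to retry, pick a different tool, or ask the user for clarification.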

Let’s consider an example in which a user wants to create a scenario with a specific type of vehicle in a desert environment. The Main Agent consults the Properties sub-agent to identify the appropriate digital twins from the FalconCloud catalog. It then handles the full workflow end-to-end: importing the selected assets, composing the scenario, and applying the necessary USD-based edits. The DTES Parser then validates the resulting scenario definition, enabling the system to automatically set up required file structures (including synthetic data output directories). Finally, Vibe Sim prompts the user to launch the scenario when ready.

Scenario updates follow the same pattern. If a user requests a change, such as time of day or weather conditions, the Main Agent identifies the correct parameters via the Properties sub-agent, applies the edits to the scenario definition, updates output configurations as needed, and prepares the simulation for relaunch — all from a single user prompt.
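The update step can be pictured as a validated edit to a structured scenario definition. The sketch below is an illustration under stated assumptions, not the DTES format or Falcon's API: the parameter names, their ranges, and the `revision` counter are all hypothetical.

```python
import copy

# Hypothetical parameter schema: name -> (type, minimum, maximum).
SCHEMA = {
    "time_of_day_hours": (float, 0.0, 24.0),
    "rain_intensity": (float, 0.0, 1.0),
}


def apply_edits(scenario: dict, edits: dict) -> dict:
    """Return an updated copy of the scenario; the original is left untouched."""
    updated = copy.deepcopy(scenario)
    for key, raw in edits.items():
        if key not in SCHEMA:
            raise KeyError(f"unknown parameter: {key}")
        kind, low, high = SCHEMA[key]
        value = kind(raw)
        if not low <= value <= high:
            raise ValueError(f"{key}={value} is outside [{low}, {high}]")
        updated["parameters"][key] = value
    # Bump a revision counter so each relaunch maps to a known scenario state.
    updated["revision"] = scenario.get("revision", 0) + 1
    return updated


scenario = {"parameters": {"time_of_day_hours": 12.0, "rain_intensity": 0.0}, "revision": 3}
evening = apply_edits(scenario, {"time_of_day_hours": 18.5})
print(evening["parameters"], evening["revision"])
```

Validating before writing and keeping the previous definition intact are what make an edit like "change the time of day" reproducible: every dataset can be traced back to an exact, checked set of parameters.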

Spatial Reasoning

This release of Vibe Sim includes a Visual Reasoning Toolkit that takes advantage of the multimodal Gemini agent’s spatial reasoning ability and enables precise placement of digital twins anywhere in the scenario according to the user's prompt (e.g., “place three cars on the road, with 3 meters of distance between each car”). To ensure accurate spatial reasoning, the agent creates a 2D map of the scenario environment, a process we’ll explore in a future blog.
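Once the road and the spacing are known, the placement itself reduces to simple geometry. The sketch below is a hypothetical illustration, not the Visual Reasoning Toolkit: it assumes a straight road on a flat 2D map, reads "3 meters of distance between each car" as a bumper-to-bumper gap, and uses a made-up car length of 4.5 m.

```python
import math


def place_along_road(start, heading_deg, count, gap_m, car_length_m):
    """Return (x, y) centers for cars lined up along a straight road.

    Center-to-center spacing is the car length plus the requested gap,
    so the gap is measured between one car's rear and the next car's front.
    """
    step = car_length_m + gap_m
    ux = math.cos(math.radians(heading_deg))
    uy = math.sin(math.radians(heading_deg))
    return [(start[0] + i * step * ux, start[1] + i * step * uy) for i in range(count)]


# Three cars heading along +x, 3 m apart, each assumed to be 4.5 m long.
positions = place_along_road(start=(0.0, 0.0), heading_deg=0.0, count=3,
                             gap_m=3.0, car_length_m=4.5)
print(positions)
```

The interpretation of "distance between each car" matters: measured center to center, the same prompt would overlap 4.5 m cars, which is one reason a grounded map of the scene is useful before placing anything.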

Simulate, Generate Data, and Train AI Models — all in the cloud!

Vibe Sim runs entirely in the browser, with no local Falcon installation or environment setup required. Users can compose and configure scenarios, launch simulations, and generate synthetic datasets without managing anything on their own machines.

While Vibe Sim is designed to eliminate manual file handling, it also preserves transparency and control for advanced users. Scenario definitions, scripts, and configurations remain accessible and editable in the cloud, enabling users to inspect changes whenever needed.

To support end-to-end iteration, Vibe Sim also integrates Jupyter Notebooks, allowing users to train and evaluate vision models (including architectures such as YOLO) directly in the same environment. This makes it possible to generate data, train models, and measure performance, without ever leaving the browser.
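One small step in such a notebook is turning ground-truth boxes into the label format that YOLO-style detectors train on: one line per object, with a class id followed by the box center, width, and height, all normalized to the image size. The sketch below assumes the ground truth arrives as pixel-space `(x_min, y_min, x_max, y_max)` boxes; Falcon's actual output format is not described here, so treat the input shape as hypothetical.

```python
def to_yolo_label(class_id, box, image_w, image_h):
    """Convert a pixel-space box to a YOLO label line.

    box is (x_min, y_min, x_max, y_max) in pixels (assumed input format).
    Output is "class x_center y_center width height", normalized to [0, 1].
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_w
    y_center = (y_min + y_max) / 2 / image_h
    width = (x_max - x_min) / image_w
    height = (y_max - y_min) / image_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# One object of class 0 in a 1000 x 800 image.
label = to_yolo_label(0, (100, 200, 300, 400), image_w=1000, image_h=800)
print(label)
```

Because simulation produces exact ground truth for every frame, a conversion like this can run over an entire generated dataset without any manual annotation pass.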

Vibe Sim + New Falcon Licenses

Starting today, Vibe Sim is available, via request, to all current Falcon Pro users.

Along with this release, we’re also introducing new Falcon licenses. The new license tiers and their recommended use cases are detailed below:

Gen: This license is focused on Vibe Sim access, and is intended for teams of AI and robotics engineers, program managers, and ops planners who need to mine their scenarios for diverse data needs via a natural language interface.

Editor: With full access to FalconEditor powered by Unreal Engine, this license is best suited for 3D and technical artists who frequently build new digital twins and compose completely novel scenarios. The Editor license provides all of the functionality associated with Unreal Engine and adds features critical to enterprise applications, including:

- Deterministic simulation

- First party build for Linux

- Full containerization

- Vital QA and safety checks

- Enterprise-grade support from Duality’s team

Pro: Designed for teams of simulation engineers who want more granular control and the greatest degree of flexibility with their simulations, the Pro license has been the mainstay for our current Falcon users. This license includes all Falcon tools and capabilities, including Vibe Sim, the Python API, a library of high-fidelity virtual sensors, FalconEditor, and much more.

To learn more about licensing and getting started with Vibe Sim today, reach out to sales@duality.ai, or simply follow this link.

Unreal Engine 5.6

Falcon 6.1 marks the transition to Unreal Engine 5.6. Falcon is always updated to the Unreal Engine release one version behind the current one. This cadence gives our customers the richest feature set, balanced with additional QA and safety checks that ensure optimal stability. UE 5.6 is a powerful update that brings improvements to hardware ray tracing as well as lighting systems. It introduces new engine efficiencies and streamlines world building with faster procedural tools.

UE 5.6 also brings significant improvements to MetaHuman capabilities with major upgrades across character creation, animation, and styling. MetaHuman Creator now runs directly inside Unreal Engine with a new parametric body system and improved visual fidelity, while MetaHuman Animator now supports real-time facial solving from a broader range of single-camera devices.

For more information on all UE 5.6 updates, please visit the Unreal Engine blog.

Catalog Updates

Our catalog of sim-ready, free-to-use, publicly available digital twins is always expanding with exciting new item, system and environment twins (including site twins — digital twins of real-world locations, generated via our AI-powered GIS pipeline).

With Falcon 6.1, we’re continuing to add diversity to our virtual environments with an urban site twin of Fresno, California. Like all the site twins in the FalconCloud catalog, it’s immediately available for any simulation.

Fresno, California

Geolocation coordinates: (36.768834, -119.758925)