Today we’re unveiling Falcon 5.4 — a release packed with major upgrades across simulation capabilities, data generation workflows, and integration pathways. Every feature in this release is designed to help physical AI teams create more accurate data, faster and with less friction. Falcon 5.4 introduces:

- Production release of the virtual SAR sensor

- New video API for integrating with world models

- Living Datasets for accessible, iterative data creation

- Fine-grained, material-based segmentation

- Containerized FalconSim for scalable, MLOps-friendly deployment

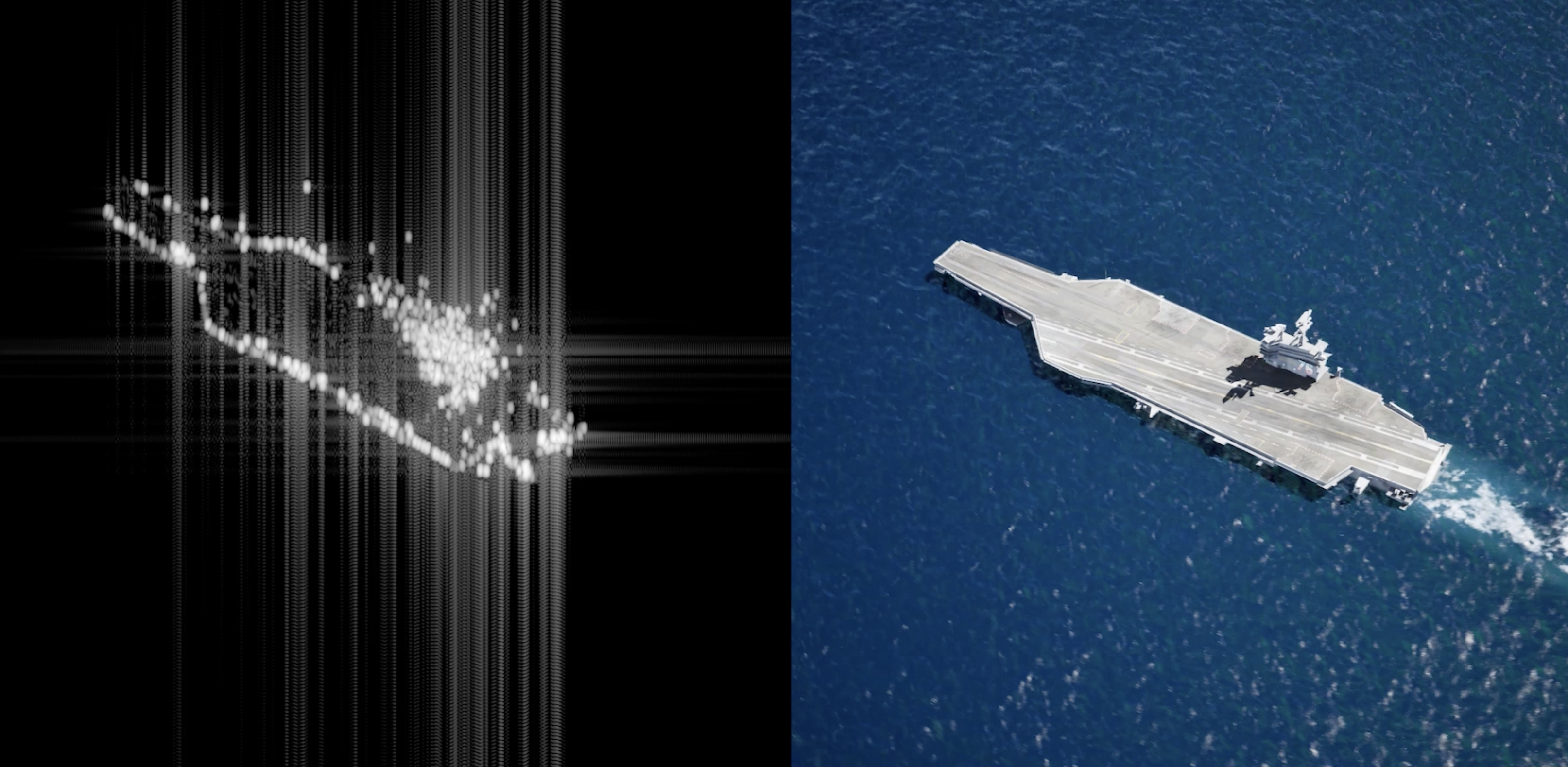

1. SAR v1.0: Production-Ready Synthetic Aperture Radar for AI, Autonomy, and Geospatial Workflows

In Falcon 5.3, we introduced our virtual Synthetic Aperture Radar sensor as a beta release, giving users a first look at our physics-based, GPU-accelerated SAR simulation integrated inside FalconEditor. With Falcon 5.4, SAR graduates to v1.0, bringing major upgrades in radar fidelity, sensor configurability, and raw performance. SAR v1.0 is a production-ready capability for generating mission-specific SAR datasets at scale, with the accuracy required for AI perception, detection, and mapping applications.

Higher-Fidelity Radar Simulation

SAR v1.0 adds full support for stripmap and spotlight modes, enabling a wider range of operational profiles across terrestrial and marine scenarios. Users now have greater control over:

- Antenna gain for more realistic signal strength modeling.

- Beam lobe attenuation for realistic beam patterns and accurate radiometric effects that closely match real-world radar behavior.
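As a rough illustration of what these two controls govern, the sketch below uses standard textbook antenna models, which are assumptions here rather than Falcon's internal implementation. Peak gain of an aperture antenna is G = 4πηA/λ². The beam of a uniformly illuminated aperture is sinc-shaped, and raising it to the fourth power gives the two-way (transmit and receive) power pattern. The function names, the aperture efficiency η = 0.6, and the example X-band dimensions are illustrative.

```python
import math


def peak_gain_dbi(width_m: float, height_m: float, wavelength_m: float,
                  efficiency: float = 0.6) -> float:
    """Peak gain of a rectangular aperture, G = 4*pi*eta*A / lambda^2, in dBi."""
    area_m2 = width_m * height_m
    gain = 4.0 * math.pi * efficiency * area_m2 / wavelength_m ** 2
    return 10.0 * math.log10(gain)


def sinc(x: float) -> float:
    """Normalized sinc, sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def two_way_pattern_db(theta_rad: float, aperture_m: float, wavelength_m: float) -> float:
    """Two-way power attenuation relative to boresight, in dB (0 dB on boresight).

    A uniformly illuminated aperture of length D has the one-way field pattern
    sinc(D * sin(theta) / lambda). One-way power is the field squared; the
    two-way (transmit and receive) power is the one-way power squared.
    """
    field = sinc(aperture_m * math.sin(theta_rad) / wavelength_m)
    two_way_power = field ** 4
    if two_way_power == 0.0:
        return float("-inf")  # exactly on a pattern null
    return 10.0 * math.log10(two_way_power)


# Illustrative X-band values (assumptions): lambda ~ 3.1 cm, 4.8 m x 0.7 m aperture.
wavelength = 0.031
print(f"peak gain: {peak_gain_dbi(4.8, 0.7, wavelength):.1f} dBi")
# The first sidelobe of a uniform aperture sits where D*sin(theta)/lambda ~ 1.43.
first_sidelobe = math.asin(1.4303 * wavelength / 4.8)
print(f"first sidelobe (two-way): {two_way_pattern_db(first_sidelobe, 4.8, wavelength):.1f} dB")
```

With these illustrative values the peak gain comes out at roughly 44 dBi, and the first sidelobe of the two-way pattern sits about 26.5 dB below boresight. A simulator that models beam lobe attenuation applies this kind of angle-dependent weighting to each return.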

SAR v1.0 also incorporates advanced signal processing, integrating sinc and Hankel–Fourier transforms to produce SAR products that more closely match real SAR data.
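One common place the sinc function appears in SAR simulation is the impulse response of an ideally focused image: each point scatterer shows up as a separable 2-D sinc in range and azimuth, complete with sidelobes. The sketch below renders point targets that way. It is a simplified stand-in under stated assumptions (ideal focusing, no weighting window, one pixel spacing for both axes), not a description of Falcon's processing chain, and it does not cover the Hankel–Fourier component.

```python
import numpy as np


def render_point_targets(targets, shape, pixel_m, res_range_m, res_azimuth_m):
    """Render point scatterers through an ideal, separable 2-D sinc impulse response.

    targets: iterable of (range_m, azimuth_m, complex_amplitude)
    shape: (n_azimuth, n_range) size of the output grid
    pixel_m: pixel spacing, assumed equal in range and azimuth
    res_*_m: resolution, taken here as the peak-to-first-null distance
    """
    n_az, n_rg = shape
    rg = np.arange(n_rg) * pixel_m  # range coordinate of each column
    az = np.arange(n_az) * pixel_m  # azimuth coordinate of each row
    image = np.zeros(shape, dtype=np.complex128)
    for r0, a0, amp in targets:
        # np.sinc is the normalized sinc, sin(pi x) / (pi x).
        irf_rg = np.sinc((rg - r0) / res_range_m)
        irf_az = np.sinc((az - a0) / res_azimuth_m)
        image += amp * np.outer(irf_az, irf_rg)
    return image


# Two illustrative scatterers: (range, azimuth, complex amplitude).
targets = [(10.0, 10.0, 1.0 + 0.0j), (14.0, 6.0, 0.5j)]
img = render_point_targets(targets, shape=(41, 41), pixel_m=0.5,
                           res_range_m=1.0, res_azimuth_m=1.0)
magnitude_db = 20.0 * np.log10(np.abs(img) + 1e-12)  # small offset avoids log(0)
```

With this convention the first null falls one resolution cell from each target; the 3 dB width of a sinc main lobe is about 0.886 times that distance.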

Map-Accurate Outputs

SAR v1.0 also introduces conversions designed for GIS-based workflows. These include:

- Slant-to-ground conversion transforms SAR imagery from slant range (the line-of-sight distance from the sensor to each point on the ground, which distorts distances in the image) into a map-based ground range representation that corrects these geometric effects.

- Orthorectification corrects the image for terrain elevation and sensor perspective, producing a map-accurate, georeferenced output.
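The slant-to-ground step can be sketched with a flat-earth geometry: a sensor at height H sees a point at slant range R, which lies at ground range G = √(R² − H²). The hypothetical helper below resamples one range line onto a uniform ground-range grid by linear interpolation. It ignores Earth curvature and terrain height; accounting for terrain is what orthorectification adds, typically using a digital elevation model.

```python
import numpy as np


def slant_to_ground(row, near_slant_m, slant_spacing_m, altitude_m, ground_spacing_m):
    """Resample one range line from slant range to ground range (flat-earth model).

    row: samples spaced uniformly in slant range, starting at near_slant_m
    altitude_m: sensor height above the (flat) ground; must be below near_slant_m
    Returns (ground_range_m, resampled_row) on a uniform ground-range grid.
    """
    row = np.asarray(row, dtype=float)
    slant = near_slant_m + slant_spacing_m * np.arange(row.size)
    ground = np.sqrt(slant ** 2 - altitude_m ** 2)  # ground range of each input sample
    # Uniform output grid spanning the imaged swath in ground range.
    out_ground = np.arange(ground[0], ground[-1], ground_spacing_m)
    # Map each output position back to slant range and interpolate the input.
    out_slant = np.sqrt(out_ground ** 2 + altitude_m ** 2)
    return out_ground, np.interp(out_slant, slant, row)


H = 1000.0
line = 1500.0 + 10.0 * np.arange(100)  # test signal equal to its own slant range
g, resampled = slant_to_ground(line, 1500.0, 10.0, H, 10.0)
```

Because ground spacing equals slant spacing divided by the sine of the incidence angle, near-range samples cover more ground than far-range ones, which is exactly the stretch this resampling undoes.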

Faster Performance

SAR v1.0 is dramatically faster than the beta release, producing SAR products with a quarter billion ray-traced signal returns in under 3 minutes. This speed enables rapid iteration and makes large-scale SAR dataset creation practical within existing engineering workflows.
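For scale, a quarter billion returns in under 3 minutes implies a sustained throughput of at least about 1.4 million ray-traced returns per second:

$$
\frac{2.5 \times 10^{8}\ \text{returns}}{180\ \text{s}} \approx 1.39 \times 10^{6}\ \text{returns/s}
$$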

2. Video API for World Models: A Bridge Between Simulation and Generative Models

World Foundation Models (WFMs) have the potential to greatly accelerate how physical AI systems are trained and deployed. But, as we explored in a recent blog post, these models struggle with precision and grounding, a challenge we call the gen2real gap. Hybrid approaches that combine the generality and accessibility of WFMs with the physical accuracy of digital twin simulation offer a practical path to closing this gap. Examples include:

- Applying domain randomization through prompted time-of-day and weather changes, without losing physical grounding

- Fine-tuning WFMs for domain-specific applications with targeted scenario training data

Video outputs of Falcon’s sensor streams are vital for this type of integration, and Falcon 5.4 introduces a critical new feature for doing just that: the Video API for World Models. The new API exports Falcon’s physically accurate sensor outputs (RGB camera, depth, segmentation, and more) as temporal video sequences designed to act as control inputs for style-transfer models like NVIDIA Cosmos Transfer1.
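Control videos need encodings that stay consistent over time. As one example, a depth stream must be converted from floating-point meters into 8-bit frames before it can be packed into a standard video. The sketch below (a hypothetical helper, not part of the Video API) uses a single near/far range for the whole clip, because normalizing each frame separately would make the same depth change gray level from frame to frame and show up as flicker. The near-is-brighter convention is an assumption, and writing the frames to a container such as MP4 is left to a video library.

```python
import numpy as np


def depth_frames_to_uint8(frames, near_m=None, far_m=None):
    """Convert a clip of float depth maps (meters) into 8-bit grayscale frames.

    One near/far range is shared by every frame, so a given depth always maps to
    the same gray level. Nearer surfaces are brighter (an assumed convention).
    """
    stack = np.asarray(frames, dtype=np.float64)  # shape (T, H, W)
    near = float(stack.min()) if near_m is None else float(near_m)
    far = float(stack.max()) if far_m is None else float(far_m)
    span = max(far - near, 1e-9)  # guard against a constant-depth clip
    scaled = (far - np.clip(stack, near, far)) / span  # 1.0 at near, 0.0 at far
    return np.round(scaled * 255.0).astype(np.uint8)


clip = [np.full((2, 2), 1.0), np.full((2, 2), 3.0)]  # two tiny synthetic frames
video = depth_frames_to_uint8(clip)
```

Passing explicit `near_m` and `far_m` values keeps the encoding identical across clips as well, which matters when many scenario runs feed the same downstream model.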

Falcon + NVIDIA Cosmos Transfer1

By providing Transfer1 with Falcon’s physically grounded, multi-sensor video streams, we can produce hyper-realistic video variations using simple text prompts, dramatically expanding the diversity of the perception datasets that can be generated from any single Falcon scenario. These variations can include:

- Diverse weather changes

- Limitless lighting conditions

- Edge cases relevant to any operational scenario

All of these are created with a text prompt, without the need to build a new scenario. With Falcon and Transfer1, a single simulated scenario can instantly become hundreds of photorealistic variants, giving teams breadth without sacrificing realism.
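A small grid of prompt fragments multiplies quickly. The sketch below builds one prompt per weather × lighting × edge-case combination; each prompt would be paired with the same Falcon control video. The fragment lists and the base scene description are illustrative assumptions, and how prompts are submitted to Transfer1 is not shown.

```python
from itertools import product

# Illustrative prompt fragments (assumptions, not Falcon or Cosmos presets).
WEATHER = ["clear skies", "light rain", "heavy rain", "dense fog", "fresh snow"]
LIGHTING = ["dawn", "midday sun", "overcast afternoon", "golden hour", "dusk",
            "night with street lights"]
EDGE_CASES = ["", "lens glare", "dust on the lens", "smoke drifting across the scene"]


def build_prompts(base_scene: str):
    """Yield one text prompt per weather x lighting x edge-case combination."""
    for weather, lighting, edge in product(WEATHER, LIGHTING, EDGE_CASES):
        parts = [base_scene, weather, lighting] + ([edge] if edge else [])
        yield ", ".join(parts)


prompts = list(build_prompts("a quadcopter flying over a rural road"))
print(len(prompts))
print(prompts[0])
```

Five weather options, six lighting conditions, and four edge-case settings yield 5 × 6 × 4 = 120 distinct prompts from one scenario.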

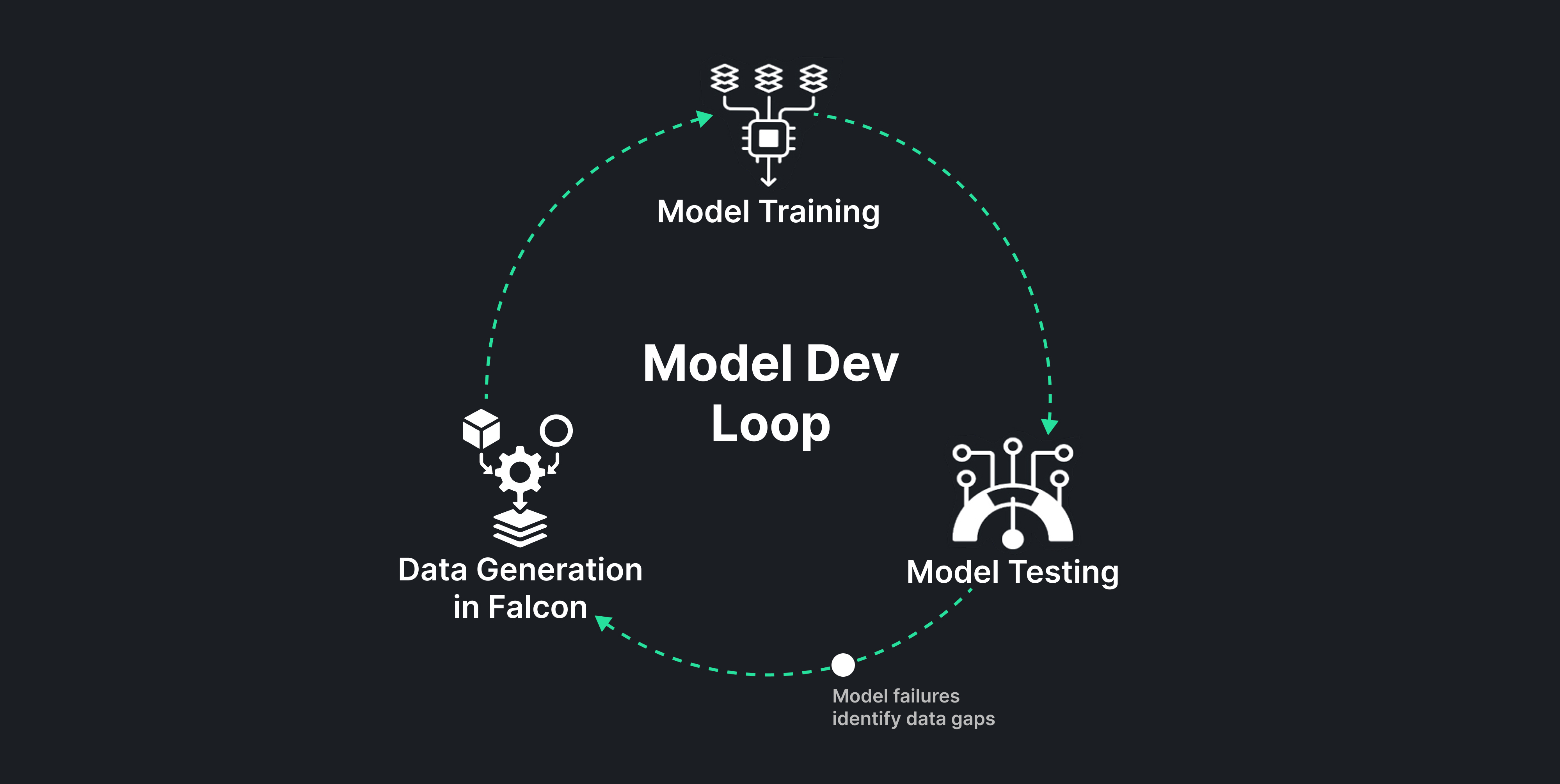

3. Living Datasets: Integrating Synthetic Data Generation Into Your Model Training Loop

From the beginning, our goal has been to help users get the data their AI models need without requiring them to be simulation or 3D experts, and to make the benefits of digital twin synthetic data accessible to everyone. Whether you’re training a drone detector, testing off-road autonomy, or refining a manufacturing inspection model, you should be able to generate the right data on demand instead of spending weeks configuring a simulation pipeline.

Today we’re introducing Living Datasets: a new way to create, manage, and evolve your data in Falcon. Traditional datasets are static snapshots — fixed collections of data that can quickly lose relevance as models and project goals evolve. Living Datasets, true to their name, enable intuitive dataset evolution. Instead of a one-and-done data export, a living dataset is a dynamic workflow: a combination of diverse, scenario-relevant parameters that allows users to continuously generate, refine, and expand their datasets over time.

This iterative nature is crucial for a model development cycle that systematically identifies and closes data gaps. Each iteration can introduce new variations or parameter adjustments, producing targeted synthetic data for the gaps discovered during model development.
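One simple way to turn evaluation results into the next iteration is to direct new data toward the classes where the model falls short. The sketch below is a hypothetical policy, not a Falcon feature: it splits a generation budget across classes in proportion to how far each class's recall falls below a target. The class names, the metric, and the proportional rule are all assumptions.

```python
def allocate_generation_budget(recall_by_class, target_recall, total_images):
    """Split a synthetic-data budget across classes in proportion to their recall deficit.

    Classes already at or above target_recall receive nothing.
    """
    deficits = {c: target_recall - r for c, r in recall_by_class.items() if r < target_recall}
    total_deficit = sum(deficits.values())
    if total_deficit == 0:
        return {}  # no gaps found: nothing to generate this iteration
    return {c: round(total_images * d / total_deficit) for c, d in deficits.items()}


# Hypothetical per-class recall from the latest evaluation run.
recall = {"drone": 0.60, "bird": 0.80, "plane": 0.95}
plan = allocate_generation_budget(recall, target_recall=0.90, total_images=1000)
print(plan)
```

Here the class at 0.6 recall receives 750 images and the class at 0.8 receives 250, while the class already at 0.95 receives none. Because each share is rounded, totals can differ from the budget by a few images in other cases.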

This living structure also provides the reproducibility and traceability that iterative AI model building depends on. Every Living Dataset includes a manifest recording the exact engine version, scenario logic, and parameter values used to create the data. That means users can deterministically regenerate a dataset months or years later, or isolate the effect of changing a single variable.
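A manifest pays off when you compare versions and regenerate from them. The sketch below assumes a hypothetical manifest layout (the field names and values are illustrative, since Falcon's actual schema is not described here). It derives a content hash that identifies a dataset version regardless of key order, and it reports which parameters changed between two versions, which is how a single-variable experiment can be confirmed.

```python
import hashlib
import json


def manifest_id(manifest: dict) -> str:
    """Stable content hash of a manifest (keys sorted so field order does not matter)."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def changed_parameters(old: dict, new: dict) -> dict:
    """Return {name: (old_value, new_value)} for every parameter that differs."""
    keys = old["parameters"].keys() | new["parameters"].keys()
    return {k: (old["parameters"].get(k), new["parameters"].get(k))
            for k in sorted(keys)
            if old["parameters"].get(k) != new["parameters"].get(k)}


# Hypothetical manifests for two iterations of one Living Dataset.
v1 = {
    "engine_version": "5.4.0",
    "scenario": "drone_detection_rural",
    "parameters": {"time_of_day": "noon", "fog_density": 0.0, "num_drones": 3, "seed": 42},
}
v2 = {**v1, "parameters": {**v1["parameters"], "fog_density": 0.3}}

print(manifest_id(v1), manifest_id(v2))
print(changed_parameters(v1, v2))
```

If the diff shows exactly one changed parameter, any shift in model metrics between the two dataset versions can be attributed to that variable.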

Every Living Dataset is designed for flexibility and collaboration, and to be an evergreen tool for the entire team: as needs change, parameters can be adjusted and the dataset evolves with them, making it easy to accommodate new model requirements or experimental conditions without rebuilding an entire pipeline. With this release, we’ve included tutorials that walk through this process step by step so users can start creating their own Living Datasets today.

This is only the beginning — future releases will introduce substantial updates for agent-based workflows, combining the strengths of generative AI and digital twin simulation for iterative, generalized, and frictionless synthetic data generation.

4. UV-Based Segmentation for Fine-Grained Annotation and Advanced Perception Training

Falcon has always supported pixel-perfect segmentation necessary for training perception systems. But prior to Falcon 5.4, segmentation operated at the mesh level: objects could only be segmented based on their underlying geometry. If a vehicle’s chassis, bumper, and license plate were all part of the same mesh, they would appear as a single class in the segmentation output, no matter how visually distinct they were.

Fine-Grained Control for Complex Objects

Falcon 5.4 introduces UV-Based Segmentation, which enables precise, fine-grained labeling of object components. Instead of being limited by mesh boundaries, users can now segment any part of an object using custom textures authored on its UV map.

To make this possible, we gave Falcon’s Capture Sensor the ability to read the UV image of any digital twin. Falcon users can leverage this functionality by creating a special segmentation texture that uses the same UV coordinates that a twin’s mesh uses at runtime. Going back to the simple example of a vehicle, the license plate can now be segmented separately, using its own color, even if it’s not a standalone mesh. For more complex, detailed assets, multiple colors can be used to encode dozens of fine-grained components.
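The lookup described above can be sketched as follows: given per-pixel UV coordinates from a render and a segmentation texture painted with one flat color per component, sample the texture at each pixel and map colors to class IDs. The function, its inputs, and the bottom-left UV origin are assumptions for illustration; Falcon's Capture Sensor performs this step internally and its interface is not shown here. Nearest-neighbor sampling is used because interpolating between texels would blend label colors into values that match no class.

```python
import numpy as np


def uv_segmentation(uv, seg_texture, color_to_class):
    """Label each pixel by sampling a segmentation texture at its UV coordinate.

    uv: (H, W, 2) float array of per-pixel (u, v) in [0, 1]
    seg_texture: (TH, TW, 3) uint8 texture painted with one flat color per component
    color_to_class: {(r, g, b): class_id}
    Returns an (H, W) int array; colors not in color_to_class map to 0.
    """
    th, tw = seg_texture.shape[:2]
    u = np.clip(uv[..., 0], 0.0, 1.0)
    v = np.clip(uv[..., 1], 0.0, 1.0)
    # Nearest-neighbor texel indices. Assumes v = 0 at the bottom of the texture
    # image (OpenGL-style), hence the flip when converting v to a row index.
    cols = np.minimum((u * tw).astype(int), tw - 1)
    rows = np.minimum(((1.0 - v) * th).astype(int), th - 1)
    texels = seg_texture[rows, cols]  # (H, W, 3)
    labels = np.zeros(uv.shape[:2], dtype=np.int32)
    for color, class_id in color_to_class.items():
        mask = np.all(texels == np.array(color, dtype=seg_texture.dtype), axis=-1)
        labels[mask] = class_id
    return labels


texture = np.zeros((2, 2, 3), dtype=np.uint8)
texture[0, :] = (255, 0, 0)  # top half of the UV map, e.g. a license plate (illustrative)
texture[1, :] = (0, 0, 255)  # bottom half, e.g. a bumper (illustrative)
uv = np.array([[[0.25, 0.9], [0.75, 0.1]]])  # a 1 x 2 "image" of UV samples
labels = uv_segmentation(uv, texture, {(255, 0, 0): 1, (0, 0, 255): 2})
```

Both components here could belong to a single mesh; the labels come entirely from the texture, which is what frees segmentation from mesh boundaries.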

5. Containerized FalconSim Deployment: Portable, Scalable Simulation for ML Pipelines

Falcon 5.4 introduces a fully containerized FalconSim, making simulation easy to deploy across a wide range of environments. By packaging FalconSim in a secure Docker image, teams no longer need to manage installations or dependencies, and can run FalconSim consistently on Linux systems, including multiple versions of Ubuntu, Red Hat, and Amazon Linux.

Containerization also makes FalconSim MLOps-ready. It integrates naturally into container-based workflows such as CI/CD, automated dataset generation, and batch simulation jobs. This gives teams a reproducible, scalable way to generate synthetic data or validate models at any point in their pipeline.
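In a batch or CI/CD setting, each simulation job usually becomes one `docker run` invocation. The sketch below only builds command lines for a set of shards, leaving execution to a scheduler or `subprocess.run`. The image name, the `/output` mount point, and the `SHARD_INDEX` variable are hypothetical placeholders; the real image name and FalconSim arguments would come from Falcon's documentation. The Docker flags themselves (`--rm`, `--gpus`, `-v`, `-e`) are standard.

```python
import shlex


def falconsim_docker_cmd(image, output_dir, shard_index, sim_args=()):
    """Build a `docker run` command for one batch-generation shard.

    image, the /output mount point, and SHARD_INDEX are hypothetical placeholders.
    sim_args is passed through unchanged to the container.
    """
    return [
        "docker", "run", "--rm",           # remove the container when the job finishes
        "--gpus", "all",                   # expose host GPUs (needs NVIDIA Container Toolkit)
        "-v", f"{output_dir}:/output",     # persist generated data on the host
        "-e", f"SHARD_INDEX={shard_index}",
        image,
        *sim_args,
    ]


cmds = [falconsim_docker_cmd("registry.example.com/falconsim:5.4", f"/data/run1/shard{i}", i)
        for i in range(4)]
print(shlex.join(cmds[0]))
```

Building argument lists rather than shell strings avoids quoting bugs, and the same list can be handed directly to `subprocess.run` or serialized into a CI job definition.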

6. Catalog Updates: New Digital Twins Available for All Simulations

Our catalog of sim-ready, free-to-use, publicly available digital twins is always expanding. Here is a small sample of recently added site twins (digital twins of real-world locations) generated via our AI-powered GIS pipeline:

- Fault Point, Utah

- Oxley Peak, Nevada

- Modoc Point, Oregon

- Rodgers Peak, Yosemite, California

- Mount Christie, Olympic Mountains, Washington

- Nellie Creek, Henson, Colorado

- Lost Lake, Eldora, Colorado

- Scotch Bonnet Mountain, Montana